My final thoughts on this semester’s experimental MoCap study: the techniques I’ve learnt and the issues I dealt with, now that the project is complete.

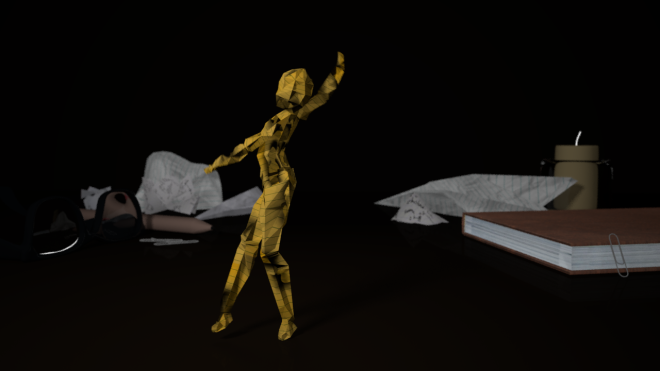

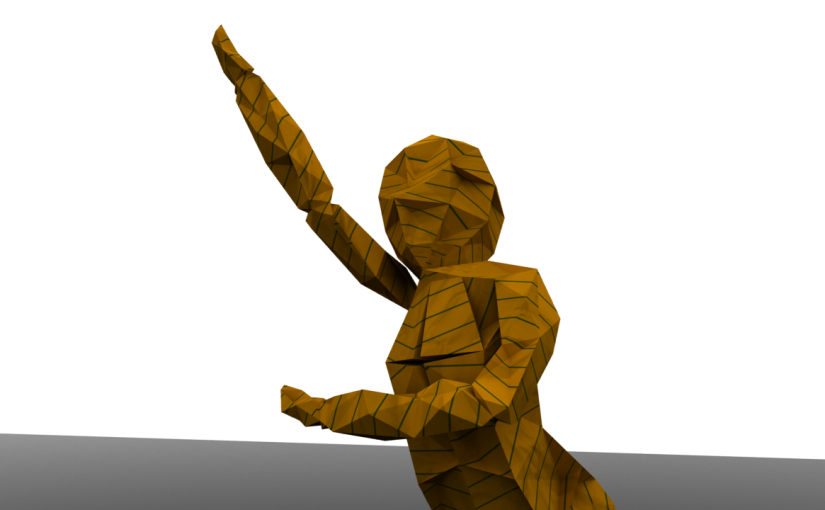

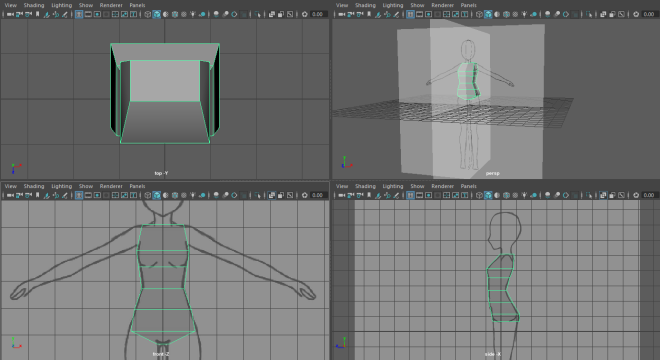

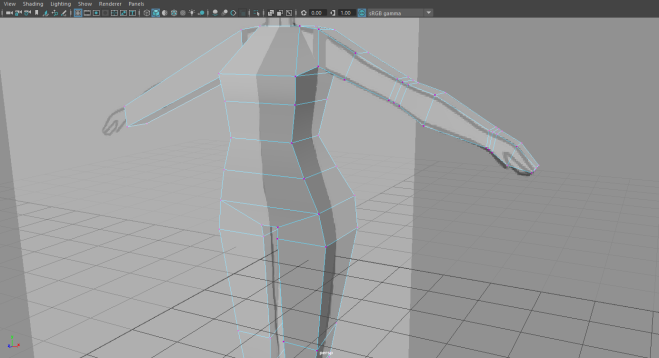

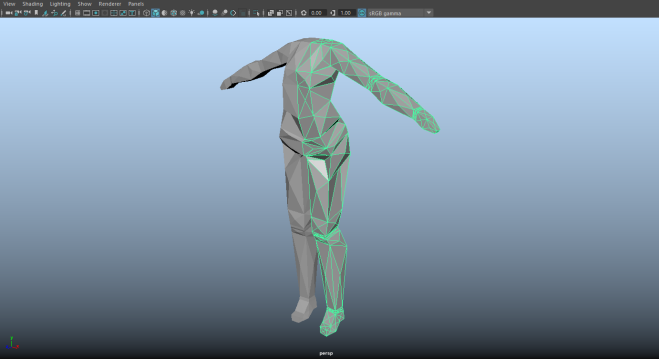

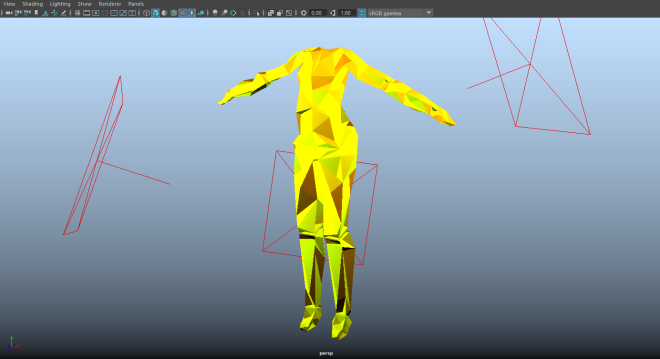

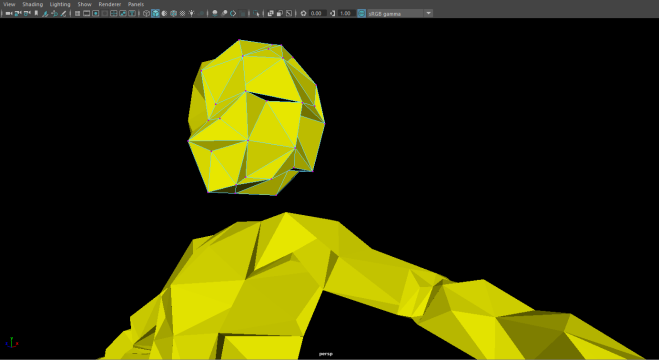

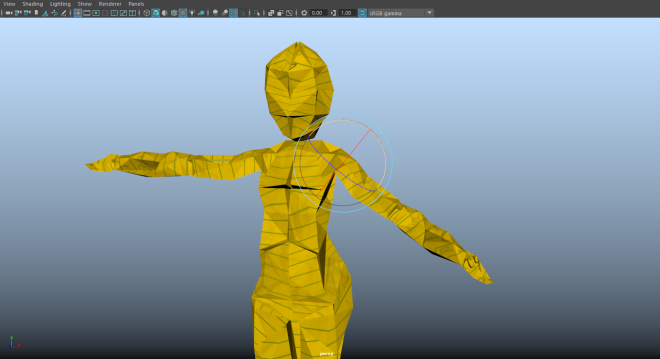

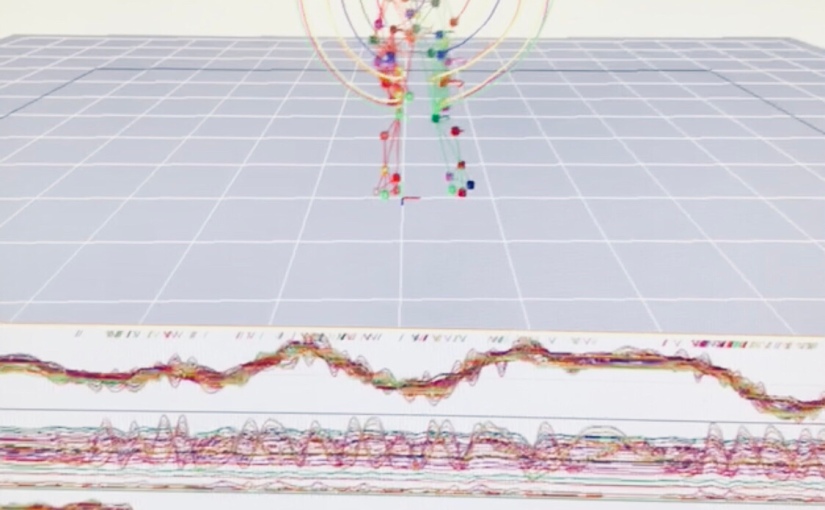

Character Modelling and Rigging. I’ve had numerous experiences with this process from past work, and it is always a breath of fresh air to find newer and more efficient ways to get it done. The Auto-Rig feature I discovered is definitely one of my highlights, and something I wish I had known about in Year 1.

Data Clean-up. I found the clean-up process to be the second most frustrating part of this semester, but the effort I put into Cortex really paid off in the sheer lack of glitches and errors in the base animation, and I will be keeping in mind the lessons learned from that stressful experience. I was fortunate enough to have finished everything before the suite became even more in demand and harder to book. That’s another note: allocate enough time to work on this especially crucial part of the project.

MotionBuilder-Maya Pipeline. Apart from the failed rig prototypes I’ve already discussed, this was the quickest and easiest stage. The tutorials provided were pretty straightforward to follow, and with them I didn’t come across any more obstacles with my character. This success of course points right back to how well the data was cleaned up and how relatively early I managed to finish it, which gave me a head start of at least a week on the rest of the tasks.

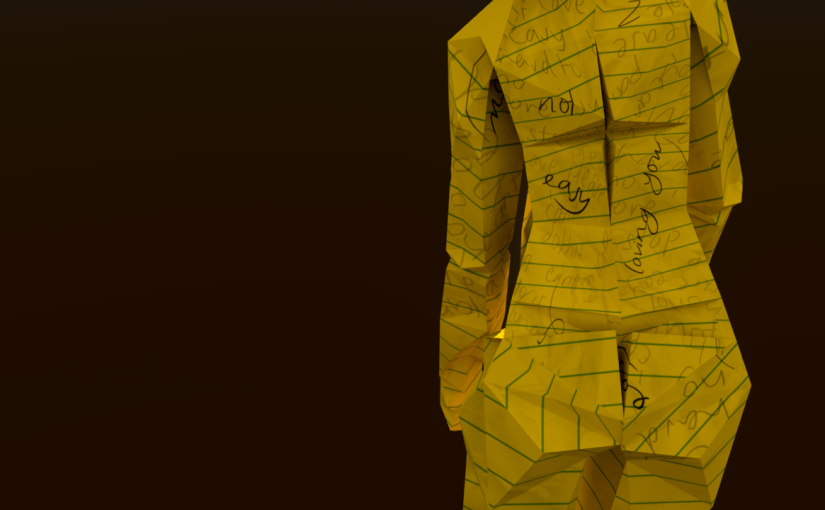

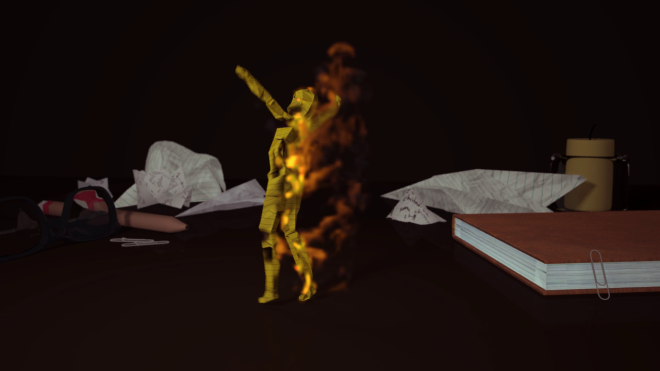

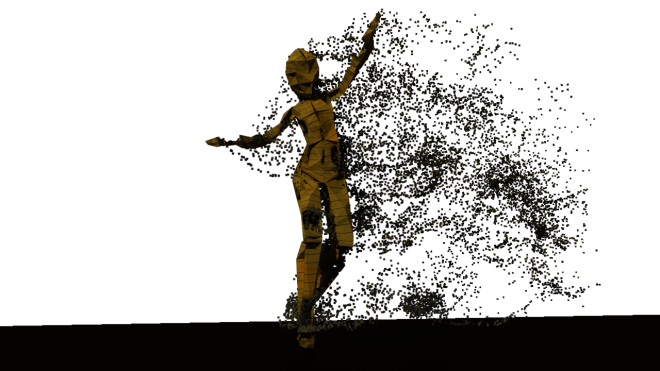

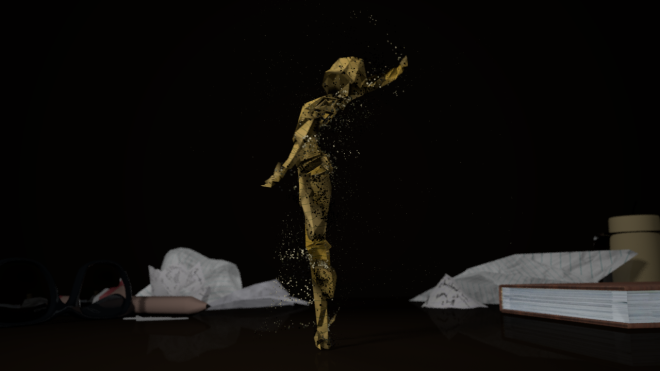

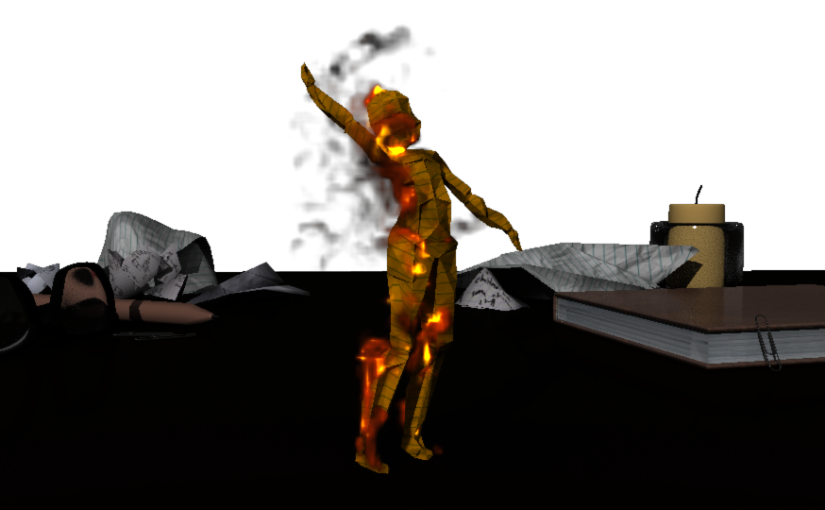

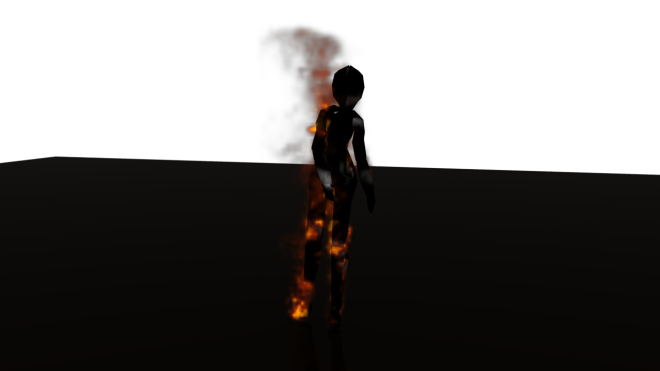

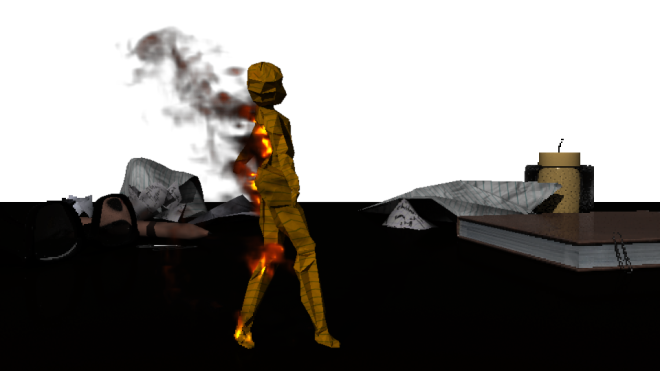

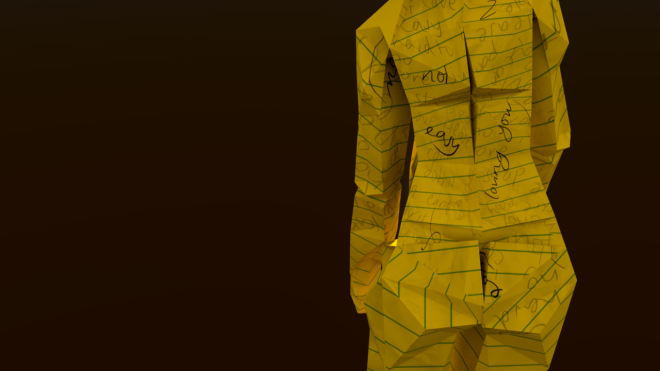

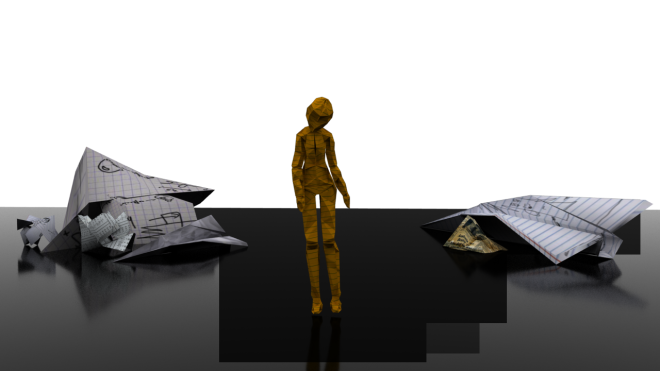

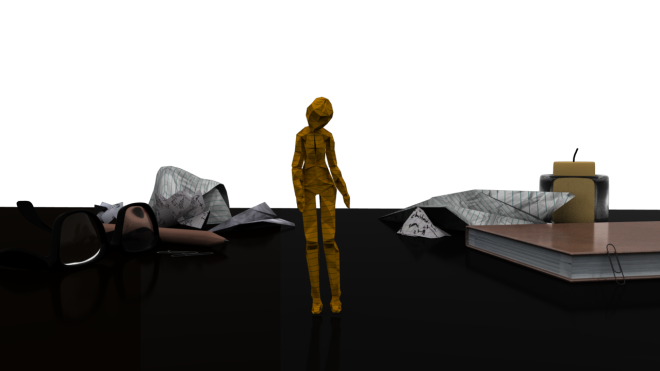

Maya FX Exploration. Since I had heaps of time to experiment, I went all out on all of my other concepts, especially the fire and disintegration (ashes), and ended up using a combination of both for my final. I first assembled playblasts of all my camera shots in Premiere Pro to better visualise my sequence and, again, to leave myself enough time to polish my work, knowing full well how time-consuming rendering would be. Which brings me to:

Rendering. It was fulfilling to have my project carefully planned for once before I jumped into rendering my shots. In past projects, I struggled the most at the render stage due to relatively poor time management. But thanks to Redshift and the playblasted drafts of my sequence this time around, I was able to work out which frames actually needed to be rendered for each camera shot, saving me the time, effort and regret of rendering more than was needed. The most frustrating part was finding out that Redshift was rejecting my fire simulation and, above all, that every solution I looked up felt hopeless. The green-screen idea was definitely a eureka moment and hence my biggest highlight of the project process.

Video Polish (the fun part!). My fire sim looked pretty pixelated because it was rendered in Arnold at low quality settings, and optimising its appearance in After Effects with the Camera Blur and Glow effects is what made all the difference. Editing has always been a forte of mine, and I found compiling the shots for both my final and work-in-progress videos enjoyable, as the feeling of having to rush through it was non-existent in this project. I compiled all of the shots in After Effects before sending my composition to Premiere Pro, where I added the necessary titles as well as a hint of maroon in the colour-correction adjustment layer to accentuate the fire and have it blend seamlessly with the backdrop.

Overall, I’m very happy looking back at how much I’ve learnt through this paper. I was paranoid about running out of time, so I only rendered the final at 720p (though that is the required resolution), and I might consider rendering out a 1080p version in the future, once I have a better understanding of Redshift and am able to work out the best settings for a higher-quality render. My OCD triggers aside, I am pleased with the outcome and can say that it has lived up to the vision I had in my head when I made its first concept art. I still stand by my 10/10 rating and full recommendation of Redshift, without which I would not have pulled through.