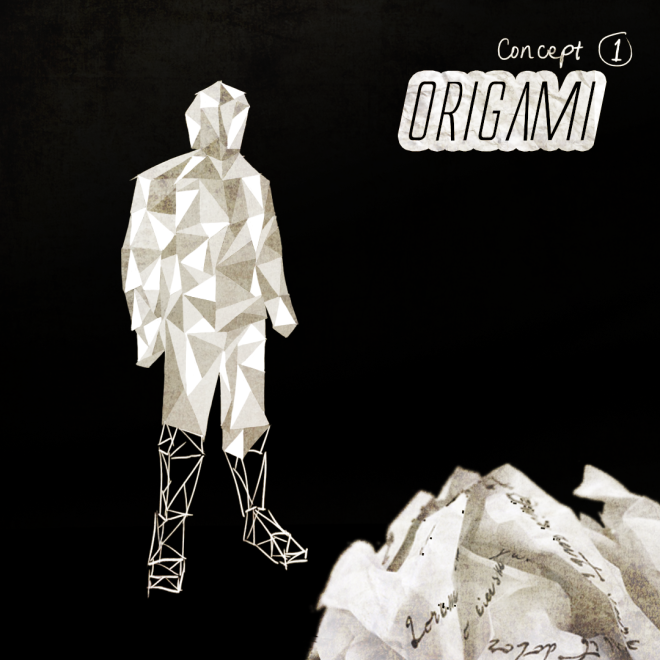

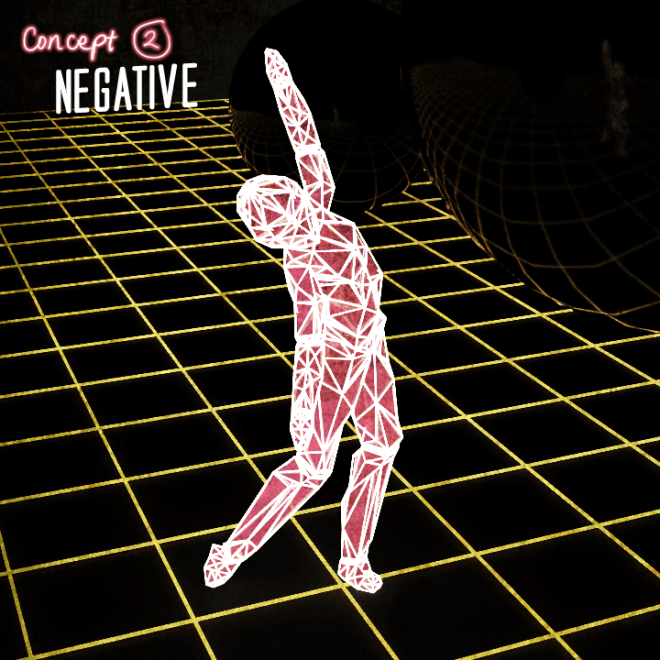

After much consideration, I decided to craft a mesh that fully mimics the look of origami, rather than combining it with a wireframe or toon-outline look as I had first planned. That combination would have been distracting and inconsistent with the mood I am going for: a melancholic sequence that serves as a visual metaphor for ‘love and death’ (mainly the death aspect). As I work through the 3D model, I will include screenshots of my process for clarity.

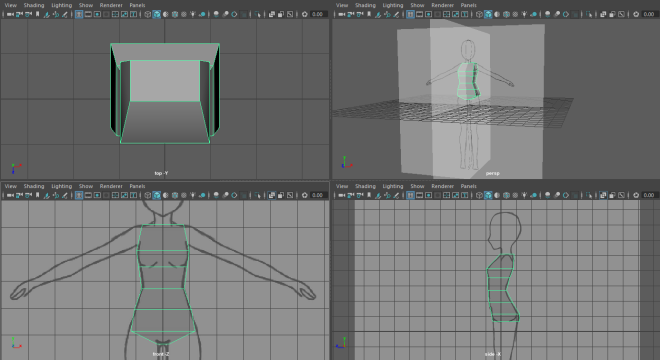

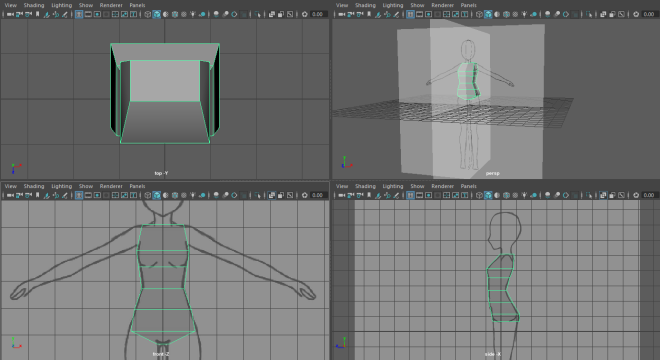

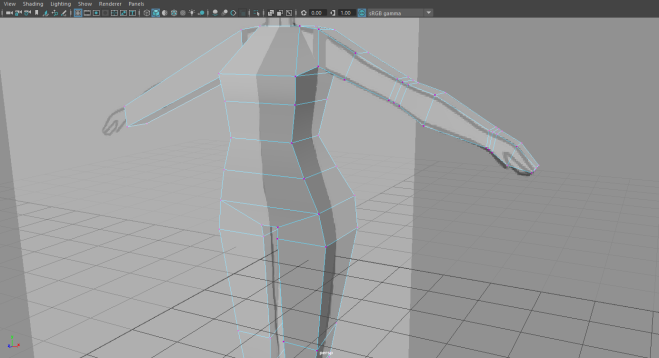

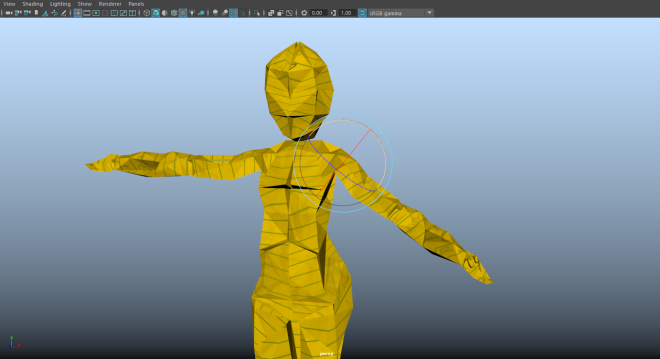

The early process involved importing reference images as planes in the orthographic front and side views of the Maya viewport, purely as modelling guides. I then began to mould the character from a primitive cube, adding edge loops and adjusting the vertices to follow the shape of the reference images.

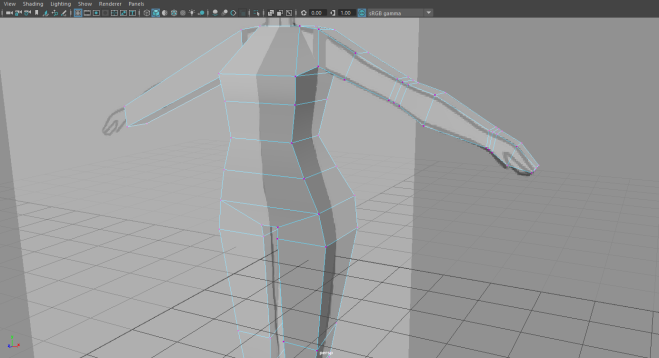

I began by shaping the torso before working my way out to the arms and legs (extruded from the top and bottom sides). An efficient way to keep the mesh symmetrical, saving me the effort of working on one side of the body at a time, is Maya’s Duplicate Special, a feature that essentially mirrors half of the mesh with instant feedback.
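
To make the mirroring idea concrete, here is a minimal sketch in plain Python of what reflecting half a mesh across the centre line involves. It is not Maya's API and not how Duplicate Special is implemented; the function name `mirror_half_mesh` and the tiny example mesh are hypothetical, and the centre line is assumed to sit at x = 0.

```python
def mirror_half_mesh(vertices, faces):
    """Mirror a half mesh across the YZ plane (x = 0) and return the whole mesh.

    vertices: list of (x, y, z) tuples for the half that was modelled
    faces: list of tuples of vertex indices into `vertices`
    (Hypothetical helper for illustration only.)
    """
    offset = len(vertices)
    # Reflect every vertex by negating its x coordinate.
    mirrored_vertices = [(-x, y, z) for (x, y, z) in vertices]
    # Reverse the winding order so the mirrored faces still point outwards.
    mirrored_faces = [tuple(offset + i for i in reversed(face)) for face in faces]
    return vertices + mirrored_vertices, faces + mirrored_faces


# One quad on the positive-x side stands in for the modelled half.
half_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
half_faces = [(0, 1, 2, 3)]

whole_vertices, whole_faces = mirror_half_mesh(half_vertices, half_faces)
```

Note that this sketch leaves duplicate vertices along the seam at x = 0; in a real mesh those would still need to be merged when the two halves are combined.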

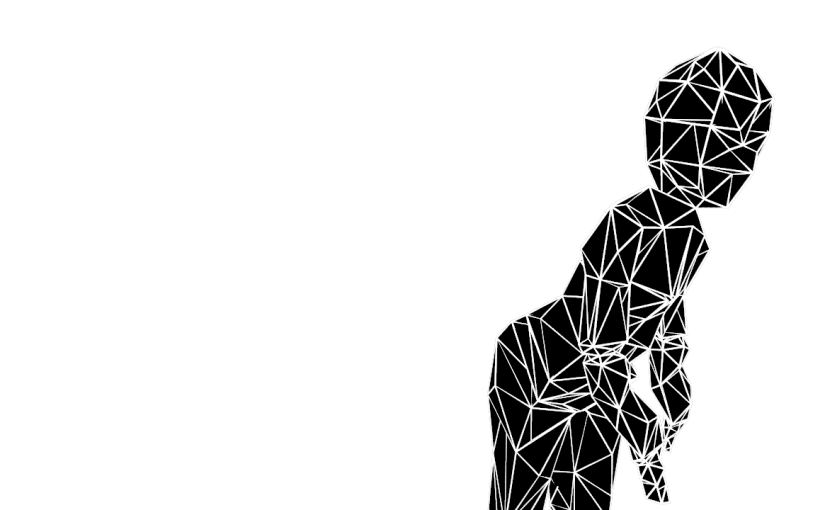

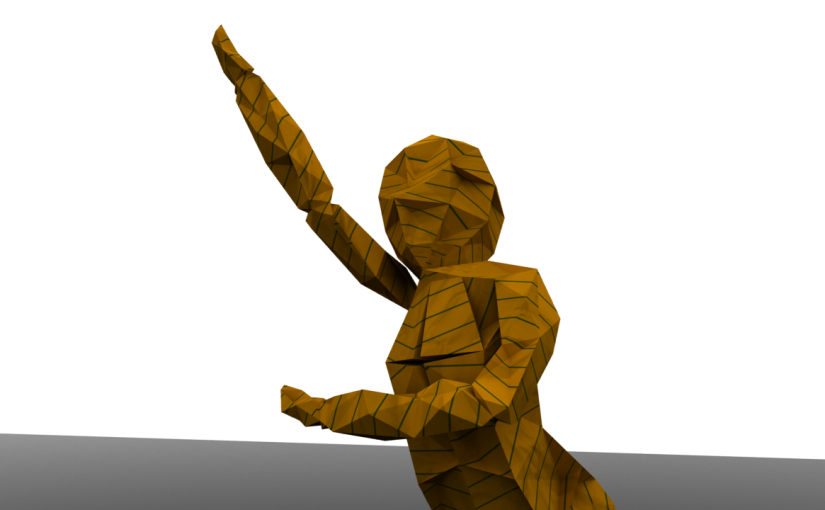

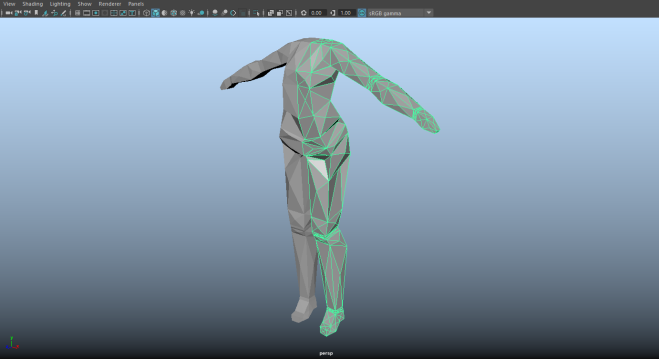

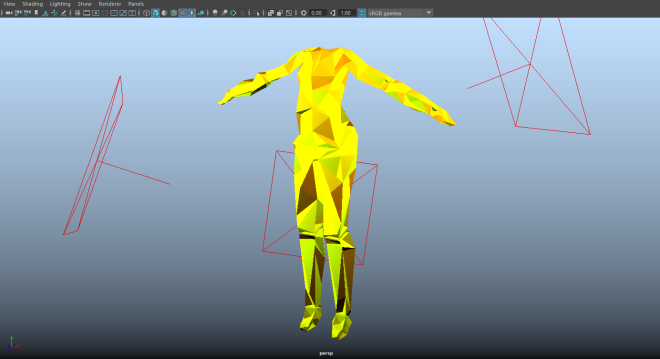

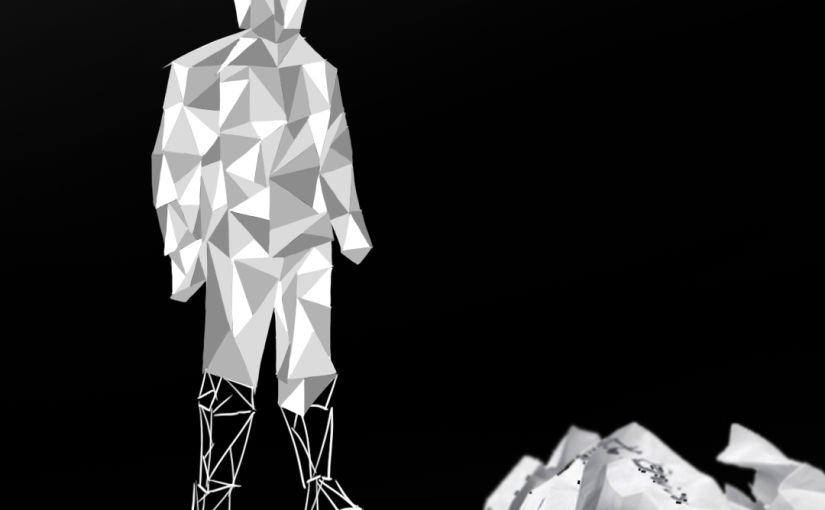

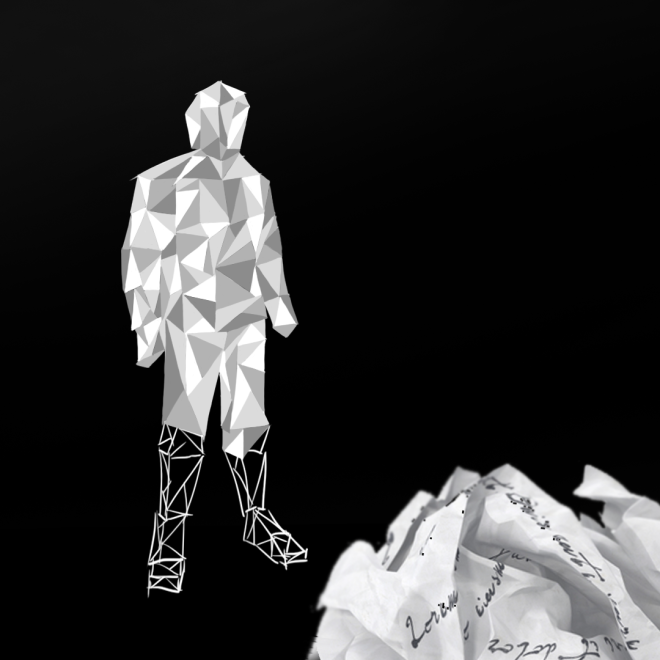

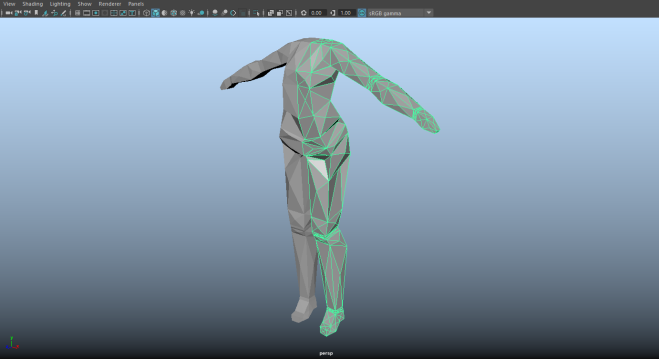

Once the model started to look more like a body, I wanted to give my character a more polygonal look to create the folds in the paper, so I subdivided the faces of the mesh into triangles (as shown in the screenshot above). With these new vertices I was able to shape the model so that it looks a lot less blocky.
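
As a rough illustration of how splitting faces into triangles produces extra vertices to shape, the sketch below adds a vertex at the centre of each face and fans triangles out from it. This is an assumption on my part: it is only one possible way to get triangles with new vertices, written in plain Python rather than with any Maya command, and `poke_faces` is a hypothetical name.

```python
def poke_faces(vertices, faces):
    """Split every face into triangles that meet at a new centre vertex.

    vertices: list of (x, y, z) tuples
    faces: list of tuples of vertex indices
    (Hypothetical helper for illustration only.)
    """
    new_vertices = list(vertices)
    triangles = []
    for face in faces:
        n = len(face)
        # The new vertex sits at the average position of the face's corners.
        centre = tuple(sum(vertices[i][axis] for i in face) / n for axis in range(3))
        centre_index = len(new_vertices)
        new_vertices.append(centre)
        # One triangle per original edge, all sharing the centre vertex.
        for k in range(n):
            triangles.append((face[k], face[(k + 1) % n], centre_index))
    return new_vertices, triangles


quad_vertices = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0), (0.0, 2.0, 0.0)]
quad_faces = [(0, 1, 2, 3)]

poked_vertices, poked_triangles = poke_faces(quad_vertices, quad_faces)
```

Pulling the new centre vertex in or out of the surface is what turns a flat quad into a small paper-like fold.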

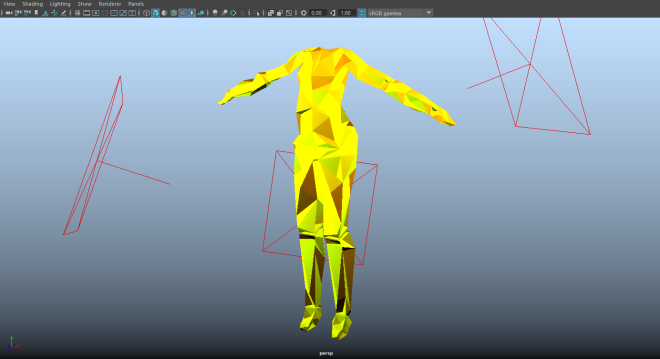

I also added lights in the scene so I could tell which areas needed more emphasis. This is the result:

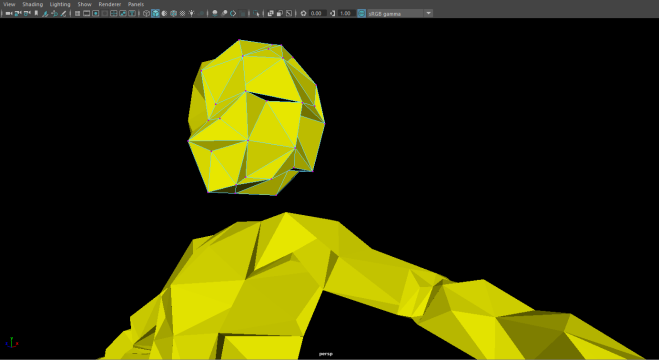

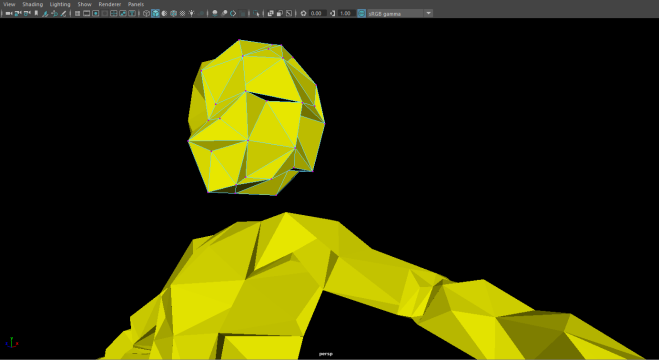

The same process was applied to the head. Instead of a cube, I used a primitive sphere to make the shaping a little easier. Once I was happy with the outcome, I connected the head to the body, deleted the Duplicate Special copy and mirrored the remaining half before combining the two into one whole mesh.

The next step was rigging a skeleton for the model, followed by Weight Painting, that is, assigning which parts of the mesh move with which joint in the skeleton. At first I followed a similar process to my 3D game character last year; a brief overview of that can be found in this post. While this rigging method worked at first, further testing had me struggling to create a fully functional control rig that was compatible with my capture data (as well as the MotionBuilder actor I would later use to retarget it). Somehow the effectors I had assigned to the joints weren’t doing their job. With little patience to figure out what to fix, let alone how, I ended up using the Auto-Rig feature in Maya instead, which I found to be more efficient and just as customisable as the rig I made previously.

Auto-Rig gave me the option of manually setting up the guides for positioning the joints. The guides were also properly labelled, making it easier to tell where to place them on my character’s anatomy. As soon as I finished this step, the control rig (with detailed control references visible in the Outliner) was created in just one click. The Weight Painting was close to perfect; I had to fix a few minor deformation errors, but this took far less effort than building the rig from scratch.
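
For anyone unfamiliar with what the painted weights actually do, here is a heavily simplified sketch in plain Python. It assumes each joint only translates (real skinning uses full joint transforms), and the joint names, weight values and function names are all made up for illustration; none of this is Maya's API.

```python
def normalise_weights(weights):
    """Scale a vertex's joint weights so that they sum to 1."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("vertex has no joint influence")
    return {joint: w / total for joint, w in weights.items()}


def skin_vertex(position, weights, joint_offsets):
    """Move a vertex by the weighted blend of its joints' translations.

    Simplification: joints are treated as pure translations, not rotations.
    """
    weights = normalise_weights(weights)
    return tuple(
        position[axis]
        + sum(w * joint_offsets[joint][axis] for joint, w in weights.items())
        for axis in range(3)
    )


# A vertex near the elbow, influenced mostly by the forearm joint.
elbow_vertex = (1.0, 0.0, 0.0)
painted = {"upper_arm": 1.0, "forearm": 3.0}
offsets = {"upper_arm": (0.0, 0.0, 0.0), "forearm": (0.0, 2.0, 0.0)}

moved = skin_vertex(elbow_vertex, painted, offsets)
```

A deformation error is usually a vertex whose weights favour the wrong joint, so it gets dragged along by a movement it should mostly ignore.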

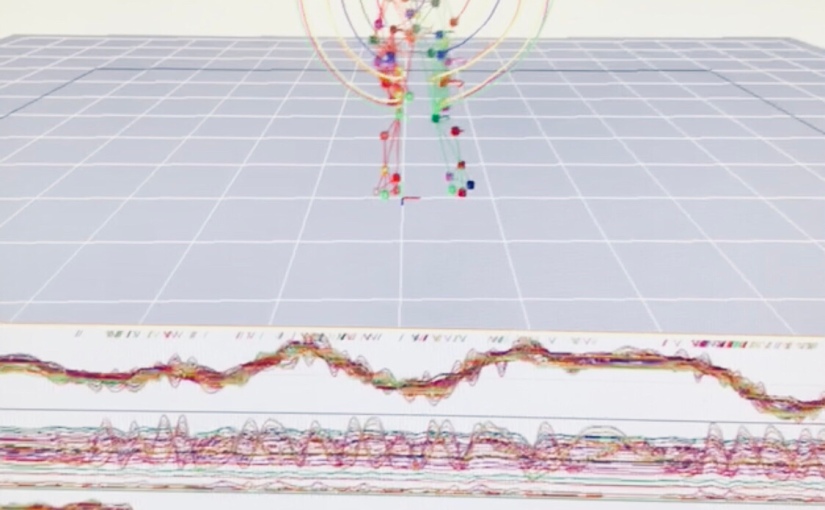

After this, I was finally able to test the new rig on my capture data in MotionBuilder without any further issues, which was a huge relief.

I then followed the Motion Capture Pipeline tutorials provided to the class to retarget my data until it moved well with the mesh, later baking it and adding extra animation layers to manually refine the movement, especially in areas not captured by MoCap such as the feet and wrists. I used the video we recorded at our session as reference for this task (see the Capture session post).

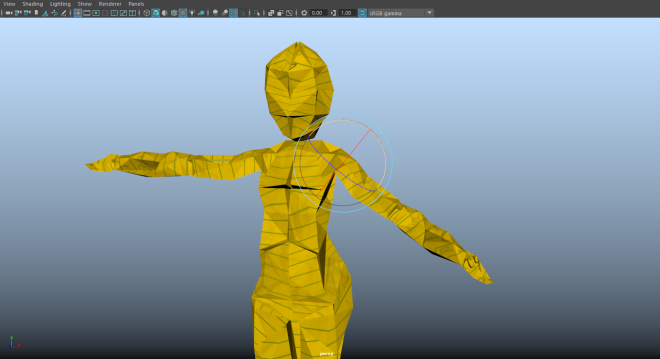

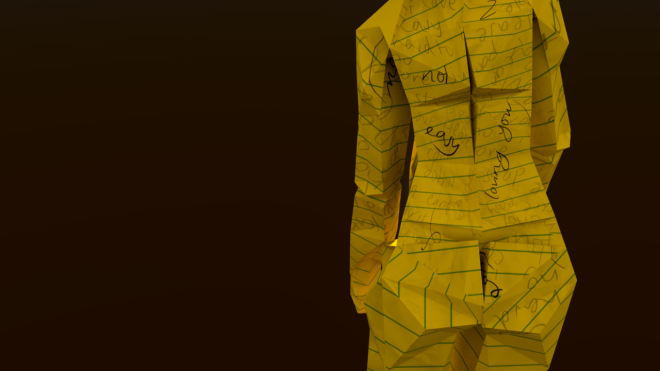

Since I’ll be adding more FX, I fixed the textures and UVs last, without baking them into the mesh, to keep my options open for the final look:

Final Character mesh

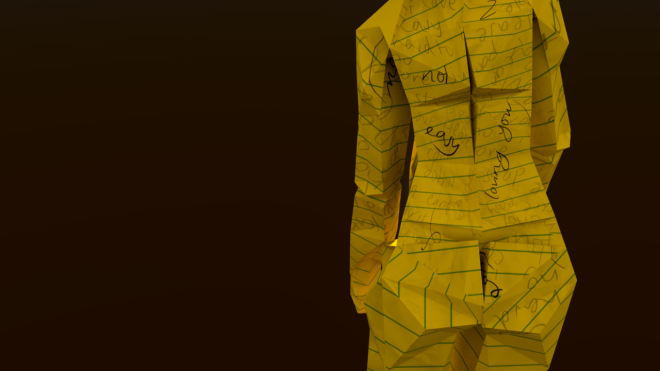

Textured look (Arnold Render)

I chose the yellow pad-paper texture (made in Photoshop) not only to mimic the look of origami but also to emphasise the paper folds through the ruled lines; I found it hard to make the folds noticeable with just a plain colour. For extra detail I also added the following scribbles:

The words “loving you, not easy” echo the lyrics of the song I intend to use in the sequence (the free track Reaching the Summit VIP Remix by Andy Leech & Justin Jet Zorbas, with permission to use and modify for educational purposes).

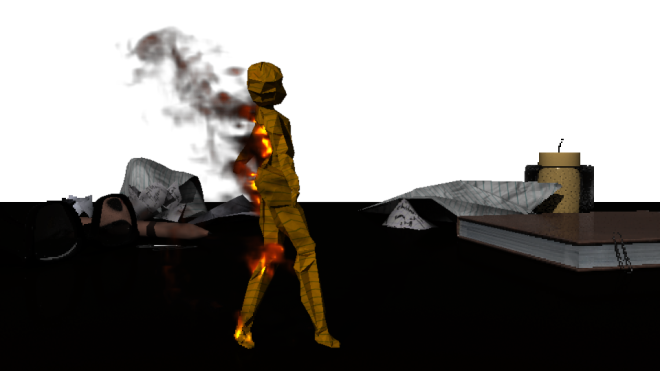

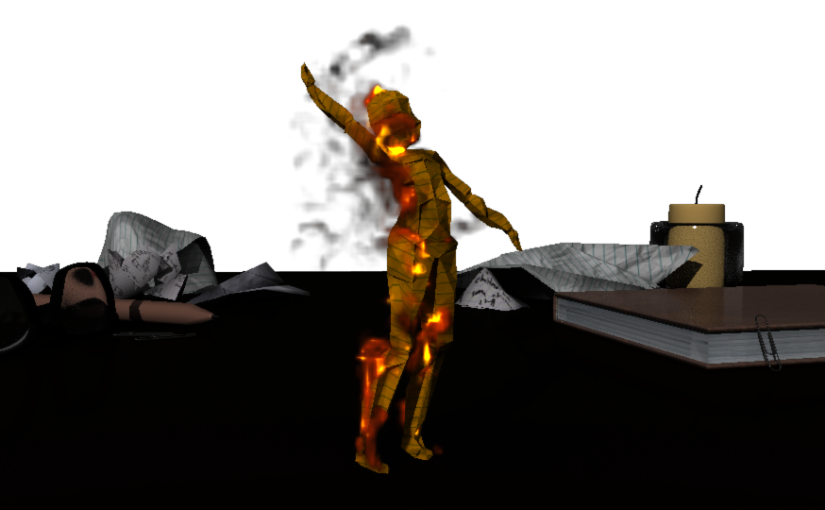

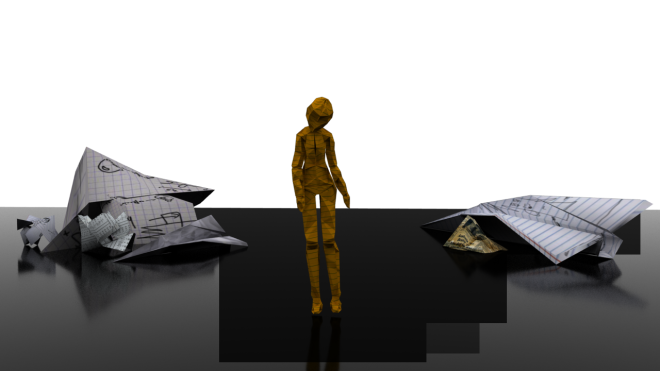

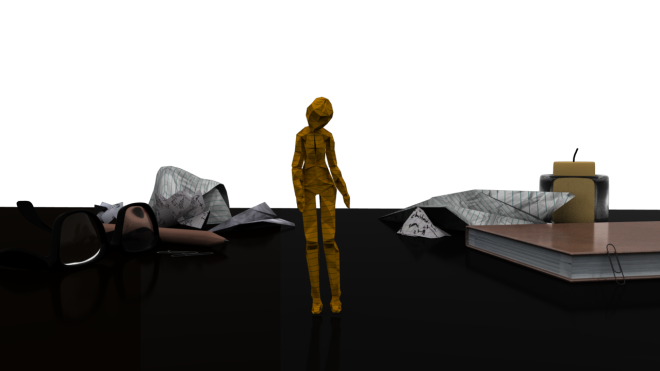

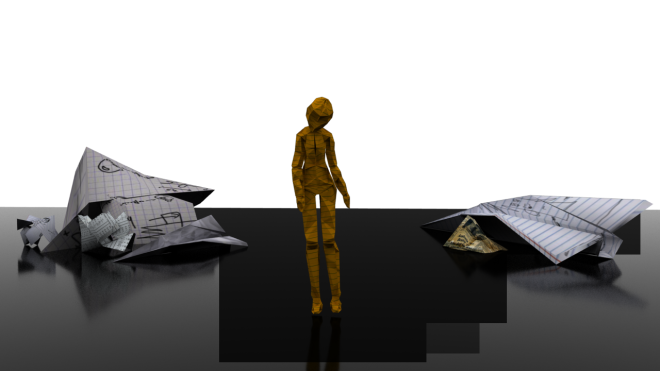

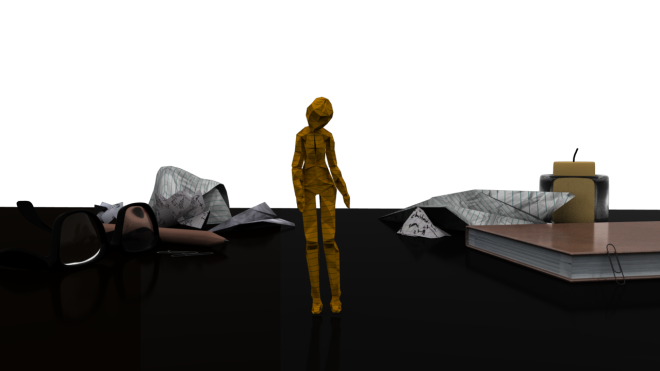

Ambience composition

Besides filling the empty backdrop of my scene to make it aesthetically pleasing, I wanted to give the environment a sense of familiarity by having it resemble a student’s desk, but not to the point where the focus is taken away from the origami character. (Note: the assets in the background are free and were downloaded from TurboSquid.)